Speed at Scale: How General Purpose GPUs Can Revolutionize the Big Data Analytics Market

Why would anyone want to build a GPU-accelerated analytics platform? Everyone knows that GPUs are great for high performance computing, deep learning AI and machine learning. But why are GPUs good for general purpose analytics?

Because the growth of data has become a very big problem when it comes to actually getting analytical insights.

Data volume is growing at a 40 percent year-over-year clip. That’s fast. And for some organizations, the data tsunami is much bigger. If you’re bringing in a lot of sensor data from mobile devices, aircraft, or automobiles, your rate of data growth is above average, to say the least.

When it comes to analyzing this data, the old solution of scaling with more CPUs no longer works. CPUs are still getting faster, by 10 to 20 percent every year, but that’s no match for the 40 percent growth rate of data.

In recent years, this gap has spawned all sorts of costly and ineffective workarounds. People are down-sampling. They’re pre-aggregating, or indexing every column. Or they’re scaling out to huge clusters of servers. Not so long ago, people would brag about the size of their Hadoop-based data lake. But it’s not cool anymore (for good reason) to have thousand-node clusters just to perform basic queries on large datasets. These are all just attempts to cope. They still don’t enable insights into massive datasets, and they are simply economically unsustainable. (I have yet to meet a CIO, CFO or CEO who wants to spend more money on hardware and data wranglers in the future.)

At MapD, every business and government agency we engage with is looking for a new way forward, and that’s where GPUs come in. GPUs, by leveraging thousands of processing cores, have become synonymous with speed, resulting in their conventional adoption for supercomputing, AI and deep learning processes. Even more crucial is how much faster they are getting. GPU performance is growing, on average, about 50 percent year-over-year, easily outpacing the growth rate of data. This makes the GPU ideal for next-generation platforms that accelerate analytics at scale.
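To make the compounding concrete, here is a minimal back-of-the-envelope sketch. It takes the growth rates quoted above (40 percent for data, 10 to 20 percent for CPUs, about 50 percent for GPUs) at face value and assumes they hold constant year over year; the function name and the five-year horizon are illustrative choices, not figures from any benchmark.

```python
def relative_capacity(compute_growth, data_growth, years):
    """Compute available per unit of data after `years`, relative to today.

    1.0 means compute has kept pace with data; below 1.0 means it has fallen behind.
    Assumes both growth rates stay constant and compound annually.
    """
    return ((1 + compute_growth) / (1 + data_growth)) ** years


DATA_GROWTH = 0.40  # 40 percent per year, as quoted in the text

# Annual performance growth rates quoted in the text (treated here as assumptions).
SCENARIOS = {
    "CPU at 10%/yr": 0.10,
    "CPU at 20%/yr": 0.20,
    "GPU at 50%/yr": 0.50,
}

for label, rate in SCENARIOS.items():
    ratio = relative_capacity(rate, DATA_GROWTH, years=5)
    print(f"{label}: {ratio:.2f}x compute per unit of data after 5 years")
```

Under these assumptions, after five years a CPU-only approach has roughly 0.30x to 0.46x the compute per unit of data it started with, while the GPU curve ends up at about 1.41x. The exact numbers matter less than the direction: one curve falls behind the data, the other pulls ahead.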

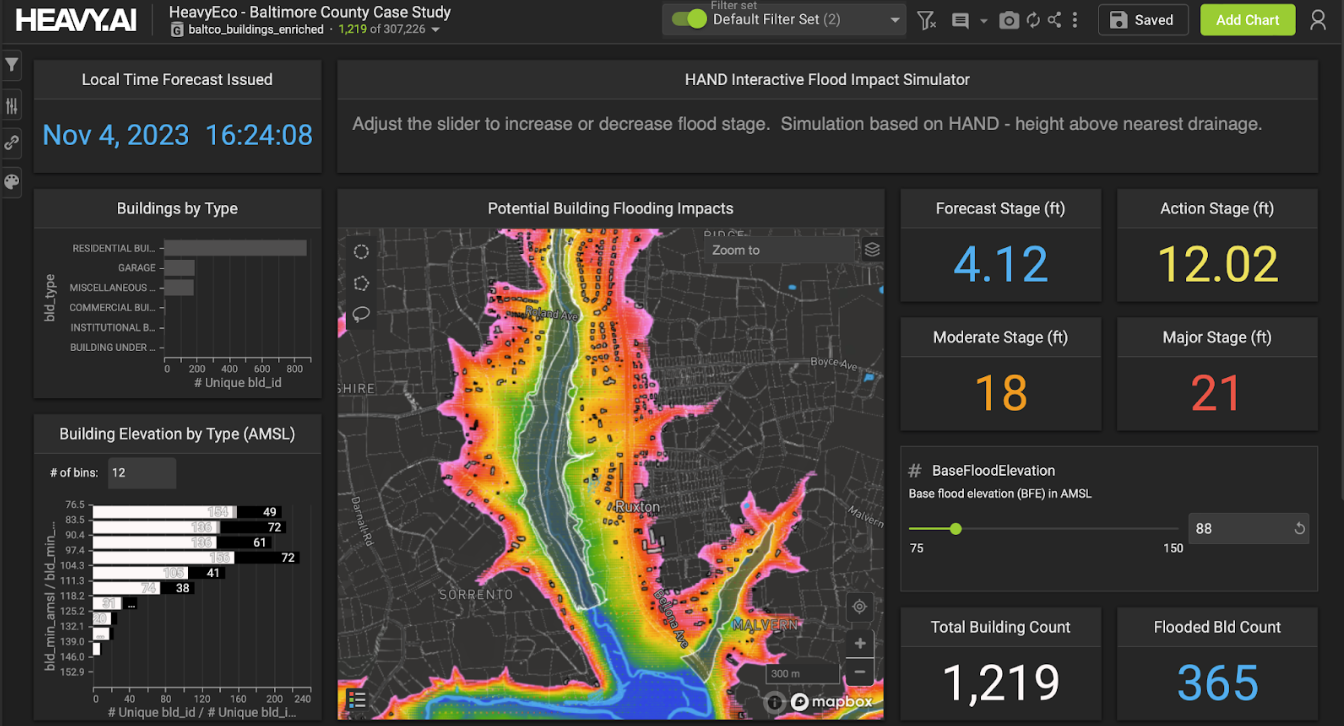

MapD originated from my research at MIT, when I was looking to use GPUs to solve extreme scale analytics problems at extreme speed. At the core of MapD is an extremely fast, open source SQL engine designed to leverage the thousands of cores across multiple GPUs and multiple servers. This allows MapD to run native SQL queries across billions of records in milliseconds.

This power is not designed for mainstream, reporting-style insights, such as seeing sales numbers or supply gaps. It is designed for people making real-time decisions based on very large, high-velocity datasets. In these settings, analysts are explorers, often working under critical time pressures. They are immersed in a torrent of data, seeking anomalies, correlations, and causes. While they need near-instant response to their queries, near-instant visualization is equally important. Our rationale is that, while it’s great to query billions of rows in real time, what if you actually want to see all those data points plotted on a map, scatter plot, or network graph? Our human eyes and brains function with millisecond latency, so why should our analytics platform, built to feed our eyes and brains, be any slower?

MapD’s answer, again, is to use the GPU. GPUs have terrific rendering capabilities, able to generate grain-level visualizations of massive datasets in real time. This dramatically lowers the cost of curiosity for the analyst by providing instant pivoting, drill-downs, and cross-filtering, regardless of the data scale. This is a new paradigm of extreme analytics, impossible until now. Indeed, for analysts and data scientists, the first experience of MapD’s extreme speed at extreme scale can be jaw-dropping.

Our vision of building our platform on GPUs is just a recent step in the rise of GPU computing. Early on, enterprising researchers attempted to shoehorn compute workloads into the graphics shaders of GPUs. Then NVIDIA pioneered and launched CUDA in 2007. CUDA and GPU computing quickly took hold for scientific workloads. More recently, the field of deep learning has emerged, almost singularly, due to the new computational possibilities enabled by GPU acceleration. The final frontier is leveraging these GPUs for general purpose compute and analytics, where MapD is at the forefront. Far from being a niche problem, these are the workloads that millions of analysts must complete on a daily basis.

MapD is a platform, not a point solution. The use cases are limited only by the imagination of our customers. Already MapD is being applied, in dozens of different ways, in business and government settings. For example, hedge funds seek tradeable insights into massive transaction datasets. Fleet managers optimize scheduling and routing, and lower maintenance costs, through analysis of telematics data. Utilities can better predict power supply usage from streams of smart meter data across the nation. Retailers explore for buyer insights in multi-year datasets that are so large they’ve literally never been analyzed before. Many customers build custom applications to leverage MapD’s querying and visual rendering engines.

Finally, it's always been my vision to make the power of our technology available to the many, not the few. While the explosive adoption of GPU computing is aiding that, we are also doing everything we can at MapD to accelerate access. We open sourced our Core SQL Engine in 2017, and just recently we launched MapD Cloud to coincide with NVIDIA GTC. Now any analyst or data scientist in the world has very affordable, zero-friction access to MapD in the cloud. While we are proud and excited to be the first GPU-accelerated analytics SaaS offering, we are not resting. Many improvements and innovations are just around the corner.

With the exponential adoption of GPUs in the data center, opportunities abound to redefine the limits of speed and scale in big data analytics.

Missed the presentation from NVIDIA GPU Technology Conference 2018? Watch it here.