Unlocking the Power of AWS Open Data with OmniSci

NOTE: OMNISCI IS NOW HEAVY.AI

The Registry of Open Data on AWS has empowered laboratories, research institutions, and various other organizations to deliver open datasets to developers, startups, and enterprises worldwide since its launch in 2018.

Anyone can easily access the registry through a web interface and search for datasets with keywords or tags like flood risk, language, or human genome.

Users are encouraged to grow the adoption of the registry by contributing datasets of their own, usage examples, tutorials, or applications built on data from the registry.

To take full advantage of these robust datasets, users need an equally powerful exploratory analytics and visualization platform. OmniSci Free and Enterprise connect with the registry and enable users to ingest data from AWS S3 directly into the platform through the Immerse UI or omnisql.

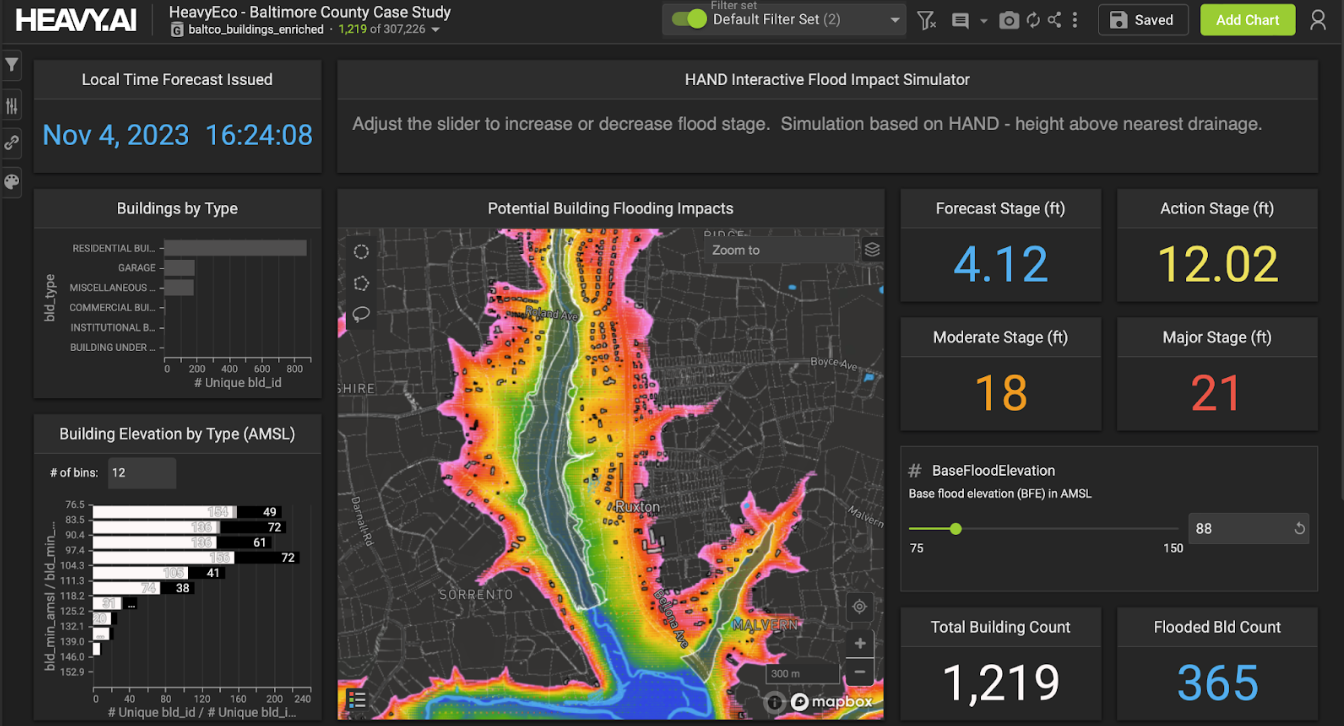

This post examines flood risk data listed on the Registry of Open Data on AWS and demonstrates how to use OmniSci's native AWS S3 ingestion paths to load data into OmniSci.

Access and Explore the Registry of Open Data on AWS

Suppose someone navigates to the registry and searches for flood risk. The lone result is the First Street Foundation (FSF) Flood Risk Summary Statistics, a collection of CSVs that contain flood statistics for the 48 contiguous states at the congressional district, county, and ZIP code geography levels.

FSF set out to make flood risk transparent, easy to understand, informative, and available to everyone by sharing it through AWS. Opening the listing reveals its rich detail, and like every dataset on the registry, it includes:

- a detailed description of the data

- how to access the information

- a license

- a link to the documentation

- contact information

- usage examples

The AWS command line interface (CLI) access information included with the flood risk listing is an excellent way to view the contents of the S3 bucket and note the directory paths that Immerse and omnisql need to load data into OmniSci DB.
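As a rough illustration of that listing step, the sketch below assembles an `aws s3 ls` command for a public bucket. The bucket name and prefix are placeholders rather than the values from the flood risk listing, so substitute the ones shown on the registry page. The `--no-sign-request` flag tells the AWS CLI to skip credential signing, which is how public buckets are usually browsed.

```python
import shlex


def s3_ls_command(bucket, prefix="", public=True):
    """Build the argument list for `aws s3 ls` on a bucket and optional prefix."""
    args = ["aws", "s3", "ls", f"s3://{bucket}/{prefix}"]
    if public:
        # Public buckets can be listed without signing the request.
        args.append("--no-sign-request")
    return args


# Placeholder bucket and prefix, not the real listing values.
cmd = s3_ls_command("example-open-data-bucket", "v1/")
print(shlex.join(cmd))
# aws s3 ls s3://example-open-data-bucket/v1/ --no-sign-request
```

With the AWS CLI installed, the same list can be handed to `subprocess.run(cmd)` to print the directory paths needed later.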

FSF's Flood Risk Summary Statistics tables have fascinating columns to explore and analyze. They provide the count and percentage of properties in each standard geography that are at risk of flooding now and that are projected to be at risk in 2050, average risk scores for each geography, and differences between FSF's calculations and FEMA's.

Ingest AWS S3 Data via Immerse UI

The easiest and most approachable way to load data into OmniSci from any AWS S3 bucket is the Immerse UI.

Two pieces of information are required to get started:

- The Region and Path for the file in the S3 bucket, or the direct S3 Link.

  - Use the AWS CLI access information identified in the previous section to find these.

- The Access Key and Secret Key for an IAM user with access to the bucket, if the data is private.

Locate the Data Manager tab on the top ribbon of Immerse and select Import Data.

From the Data Importer screen, select the Import data from Amazon S3 option.

Choose whether to import using the S3 Region, Bucket, and Path, or direct S3 Link. This example uses the former.
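The two input styles carry mostly the same information. As a small sketch, assuming the link uses the `s3://bucket/path` form, the bucket and path can be recovered from a link as shown below. The link is a placeholder, and the region is not encoded in an `s3://` link, so it still has to come from the listing.

```python
from urllib.parse import urlparse


def split_s3_link(link):
    """Split an s3://bucket/path link into a (bucket, path) pair."""
    parsed = urlparse(link)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an s3:// link: {link!r}")
    # The bucket sits where a hostname would; the rest is the object path.
    return parsed.netloc, parsed.path.lstrip("/")


# Placeholder link, not the real flood risk path.
bucket, path = split_s3_link("s3://example-open-data-bucket/v1/county.csv")
print(bucket)  # example-open-data-bucket
print(path)    # v1/county.csv
```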

Input the following parameters into the corresponding boxes in Immerse and disable the Private Data checkbox:

OmniSci infers the column data types based on sampling and provides a preview of the table. It's best practice to review the data type selections and make modifications where necessary. Changing the county FIPS column from integer to dictionary-encoded string is the only change needed in this example.
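One likely reason for that change (an assumption here, since the reasoning is not spelled out above) is that county FIPS codes are five-character identifiers that can start with a zero, and an integer column drops the leading zero. A quick sketch with three county codes shows the effect:

```python
# County FIPS codes are five characters and may start with zero.
raw_fips = ["01001", "06037", "48201"]

# Treated as integers, the leading zeros disappear.
as_int = [int(code) for code in raw_fips]
print(as_int)  # [1001, 6037, 48201]

# Treated as strings, the codes survive unchanged.
as_text = list(raw_fips)
print(as_text)  # ['01001', '06037', '48201']

# If the codes were already loaded as integers, padding restores them.
restored = [str(number).zfill(5) for number in as_int]
print(restored == raw_fips)  # True
```

Keeping the column as a string also makes later joins against other tables keyed on FIPS codes less error-prone.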

While not applicable in this case, there are other options to consider before finalizing the ingestion, such as:

- null string substitutions

- delimiter types

- header row inclusion

- existence of quoted strings
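To make those options concrete, here is a rough Python sketch of what each one changes when a delimited file is parsed. It uses the standard `csv` module rather than OmniSci's importer, and the sample rows, column names, and the `NA` null marker are invented for illustration.

```python
import csv
import io


def read_rows(text, delimiter=",", has_header=True, quoted=True, null_string=""):
    """Parse delimited text, honoring the four import options listed above."""
    quoting = csv.QUOTE_MINIMAL if quoted else csv.QUOTE_NONE
    rows = list(csv.reader(io.StringIO(text), delimiter=delimiter, quoting=quoting))
    header = rows[0] if has_header else None
    data = rows[1:] if has_header else rows
    # Replace the null marker with None so it is not mistaken for real text.
    data = [[None if cell == null_string else cell for cell in row] for row in data]
    return header, data


# Invented sample: pipe-delimited, one quoted field, "NA" marking a null.
sample = 'fips|label|score\n01001|"low|moderate"|3\n06037|high|NA\n'
header, data = read_rows(sample, delimiter="|", null_string="NA")
print(header)  # ['fips', 'label', 'score']
print(data)    # [['01001', 'low|moderate', '3'], ['06037', 'high', None]]
```

Getting any one of these settings wrong tends to show up as shifted columns or rejected rows, which is why the preview step is worth a careful look.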

Give the table a name and click Save Table. When the process completes, a final table review screen shows the number of rows loaded, a count of rows rejected, and the table schema.

Ingest AWS S3 Data via omnisql

Power users can use the command line and omnisql to load data into OmniSci from AWS S3 using the same parameters as the Immerse UI.

Create a new table to function as the target schema.
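As a sketch of what that target table might look like, the helper below renders a `CREATE TABLE` statement from a list of columns. The table name and columns are hypothetical stand-ins, not the actual flood risk schema. The FIPS column uses `TEXT ENCODING DICT`, OmniSci's dictionary-encoded string type, in line with the type change made in the Immerse walkthrough.

```python
def create_table_sql(table, columns):
    """Render a CREATE TABLE statement from (name, type) pairs."""
    body = ",\n".join(f"  {name} {sql_type}" for name, sql_type in columns)
    return f"CREATE TABLE {table} (\n{body}\n);"


# Hypothetical columns for illustration only.
columns = [
    ("fips", "TEXT ENCODING DICT"),
    ("count_property", "INTEGER"),
    ("avg_risk_score", "FLOAT"),
]
ddl = create_table_sql("flood_risk_county", columns)
print(ddl)
```

The printed statement can be pasted into omnisql or the Immerse SQL editor once the columns match the CSV being loaded.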

Jump over to the command line, launch omnisql, and connect to the database that houses the target table. This example uses the default omnisql directory path.

Load the target table using the COPY FROM command with the desired S3 path, and use the WITH clause to specify the S3 credentials and the region of the bucket. If the bucket is publicly accessible, as in this example, there are no credentials to pass.
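A minimal sketch of assembling that statement follows. The option names `s3_region`, `s3_access_key`, and `s3_secret_key` are assumed here and should be confirmed against the documentation for your version. The table name, S3 path, and region are placeholders.

```python
def sql_quote(value):
    """Wrap a value in single quotes, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def copy_from_s3(table, s3_url, region, access_key=None, secret_key=None):
    """Build a COPY FROM statement that reads a file from S3."""
    options = [("s3_region", region)]
    # Credentials are only added when both keys are supplied (private buckets).
    if access_key and secret_key:
        options.append(("s3_access_key", access_key))
        options.append(("s3_secret_key", secret_key))
    with_clause = ", ".join(f"{name}={sql_quote(value)}" for name, value in options)
    return f"COPY {table} FROM {sql_quote(s3_url)} WITH ({with_clause});"


# Placeholder table, path, and region for a public bucket.
statement = copy_from_s3(
    "flood_risk_county",
    "s3://example-open-data-bucket/v1/county.csv",
    "us-east-1",
)
print(statement)
# COPY flood_risk_county FROM 's3://example-open-data-bucket/v1/county.csv' WITH (s3_region='us-east-1');
```

For a private bucket, passing both keys adds them to the WITH clause. Avoid hard-coding real keys in scripts that get shared.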

The query returns the number of records loaded, the number of rows rejected, and the ingestion runtime.

Try it out for yourself! Pick a dataset you find compelling on the Registry of Open Data on AWS and use OmniSci's native AWS S3 ingestion paths to load data into your OmniSci instance.

If you seek some inspiration for your analysis, check out our presentation from ODSC, where we synthesize the First Street Foundation Flood Risk Summary Statistics and demographic information to explore vulnerabilities now and in the future.

Download OmniSci Free today! Share your data choices and any visualizations you create with us through LinkedIn, Twitter, or our Community Forums.